Making MCP better

MCP has become very popular the year since it was launched but there are serious technical limitations. Can we make it better?

This post by Alex Albert - Head of Developer Relations at Anthropic lines up with the major developments that have occurred in AI over the past few years. The prediction for 2026 was intriguing and left room for speculation as to what exactly he meant. Yesterday, Anthropic posted a technical blog on Code Execution with MCP that shed light on the company's thinking.

MCP has been a huge success in terms of development mindshare since it was released in 2025. This I think is due to it being a bridging technology between AI compute and traditional compute and data. Enterprise AI initiatives are only possible if there is a way to bring the data from traditional systems into LLM workflows. In this respect, you could add MCP on an architectural diagram to show how you would connect AI workflow with your other systems - similar to how you connect systems together using REST. Everybody gets MCP - from developers to the C-suite - it has become part of the vernacular.

The Anthropic blog highlighted a couple of issues with the current implementation of MCP.

- Tool definitions are added to the context window.

- Intermediate results are added to the context window - often multiple times.

The first issue is that the entire tool definitions for an MCP server are loaded into the context window and sent on every LLM call. This could cost a few thousand tokens. The second issue is that if you load an MCP server with a tool for reading a Google Drive document and a second tool for analyzing that document and that document is 50k tokens, then the LLM sees the 50K twice.

As a result of these and other design flaws in MCP - way more tokens are being used than necessary. The alternate approaches shown in the Anthropic blog was able to reduce token use from 150,000 tokens to just 2,000 tokens. Tokens mean energy, and as the AI boom continues optimizations like these are just as important as new data centers and energy infrastructure.

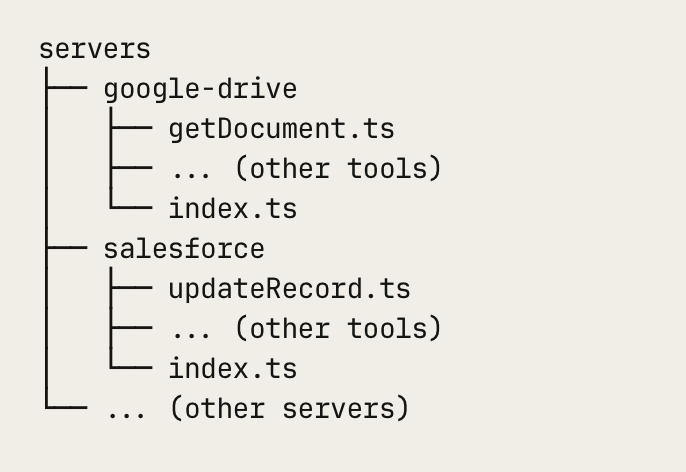

The solutions involve moving the tool calling out of the LLM's context. Instead, the LLM uses the MCP server as documentation of the tools that are available, generates code, and then the code can be executed in an environment. Some of that code could also exist as functions on the filesystem rather than being generated during an LLM call.

This collection of approaches has also been promoted by Cloudflare in their blog about Code Use. But Anthropic had also been hinting about another possible direction with Claude Skills and a lot of the solutions in this MCP article take from their approach with skills such as progressive loading of contacts and delegating to tools on the environment.

Other technologists have proposed using CLIs as alternatives to MCP. It turned out to be surprisingly effective to simply describe which CLI tools are available to the LLMs and allow even more self-discovery by calling the --help function. And well-designed command line programs are composable in a Unix-style way that's not possible with MCP. So if you're building a tool from scratch, one recommendation is that it's cheaper and more effective to just make a good CLI, and if it is well-designed, you can then add MCP on top.

Having said this, then what is the future of MCP - will it continue to rise in popularity? There are already tools like Maxime Rivest's mcp2py , which can convert any MCP into a Python library or mcporter which can do similar but with Typescript. So has the great MCP migration of 2026 started?

My guess is that the constraining factor will be token economics - there won't be, at least in the medium term, the energy to sustain the hyperbolic growth in AI compute. This will result in context being treated as a scarce resource, in a similar way to RAM being scarce relative to disk, leading to specific software design patterns. You will still have MCP, but it will be refactored in a way that's more efficient for of context management.

Conclusion

MCP is changing for the better as we learn to manage context more efficiently, and as we improve our understanding of which technique to use in which specific circumstance. We now can use MCPs as documentation, we can use code generation, we can use functions on disk, and we can use the new skills API.

This leads into the direction of EdgarTools. There is now a basic MCP implementation, but I have not been convinced enough in it as a technology to implement more than a couple APIs and workflows. In the last couple releases, I have added EdgarTools Skills with the ability to install into Claude Code and Claude Desktop. I'm sufficiently convinced in this as the better approach that I've spent a lot more time in a couple releases expanding the skills and expanding the documentation.

Edgartools is the most comprehensive open source library for navigating SEC filings. Visit and star the Github repo, and install and try it using pip.