Beware AI bots bearing good code

How a bot submitted a PR to edgartools that fixed a tricky XBRL bug, what I did about it, and how you can handle AI bots in the future

I started early on a frigid mid-January morning, aiming to clear my deck for feature work on Release 5.12.0. I triaged a couple of issues, did some vibe coding, and merged a PR that fixed a tricky XBRL bug.

The bug: the wrong labels were returned when querying XBRL facts by dimension. I had attempted a fix and released it, but the issue was reopened because my fix wasn't quite correct. Within hours of the reopening, a fix appeared from a contributor I didn't recognize.

The PR fixed the issue. I reran the tests, retested the user scenario, and it worked. My code review turned up no obvious coding flaws, security issues, or suspicious patterns. So I merged it.

You have just merged a PR from an AI bot

A few hours later, an email arrived with a warning.

The warning came from PabloMK7, a security researcher from Valencia, Spain. Pablo is highly respected: he previously discovered CVE-2022-47949, a critical vulnerability (CVSS 9.8) that affected Mario Kart, Splatoon, and Animal Crossing. When someone who has found real Nintendo security flaws tells you there's a bot in your codebase, you listen.

His own project, azahar-emu/azahar, had been targeted by the same account, and he was warning other affected maintainers. Fortunately, I had only merged the PR; it wasn't in a release yet. The countermeasure was simple: I reverted the merge.

Reviewing the PR the second time

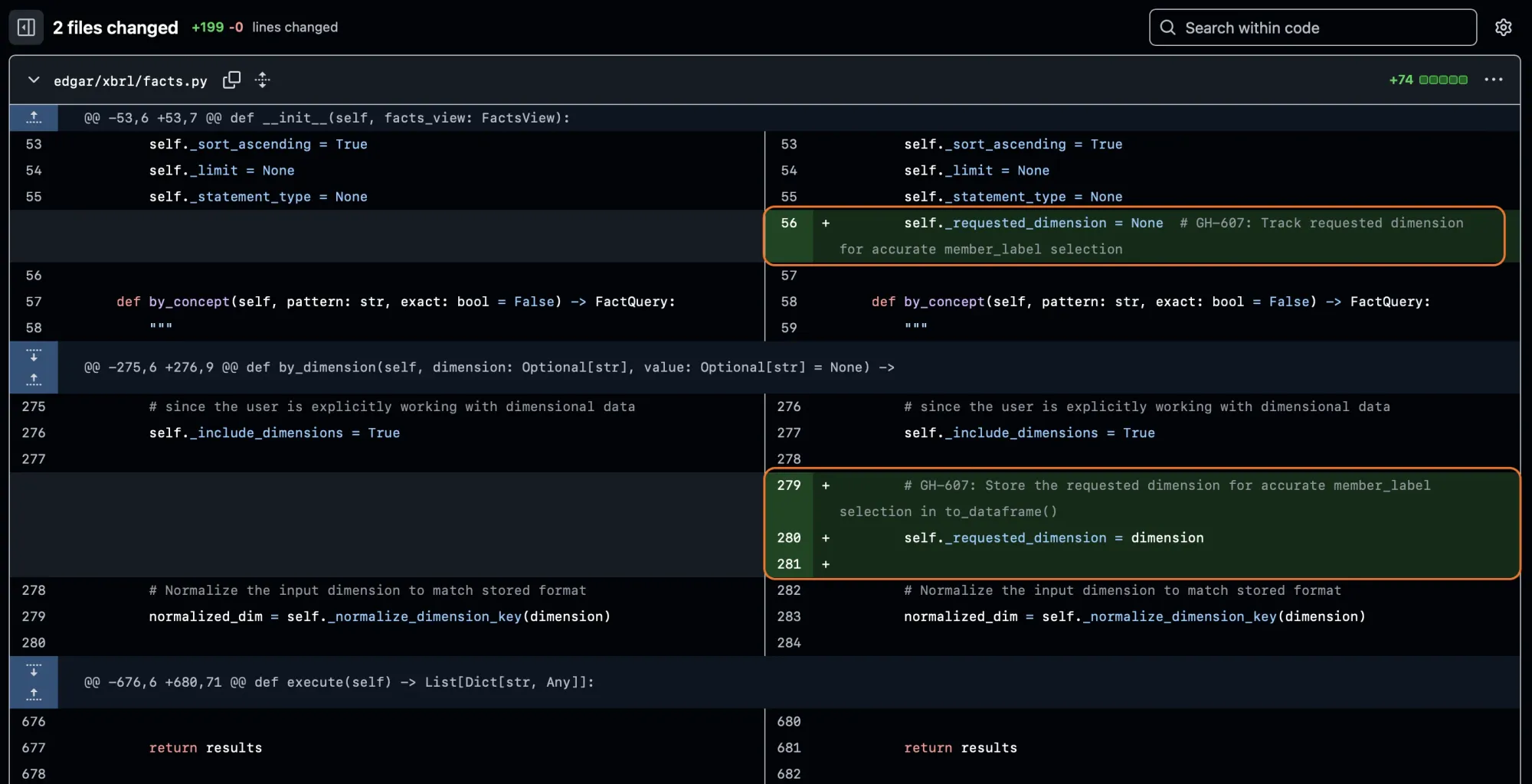

Accepting that I probably should have done more due diligence, let's walk through the PR again and see whether there was something I should have spotted the first time around. The crux of the fix was a new field that tracked the dimension the user was querying on, so that the right labels would be output at the end. The new field was declared with proper comments describing it and referencing the GitHub issue.

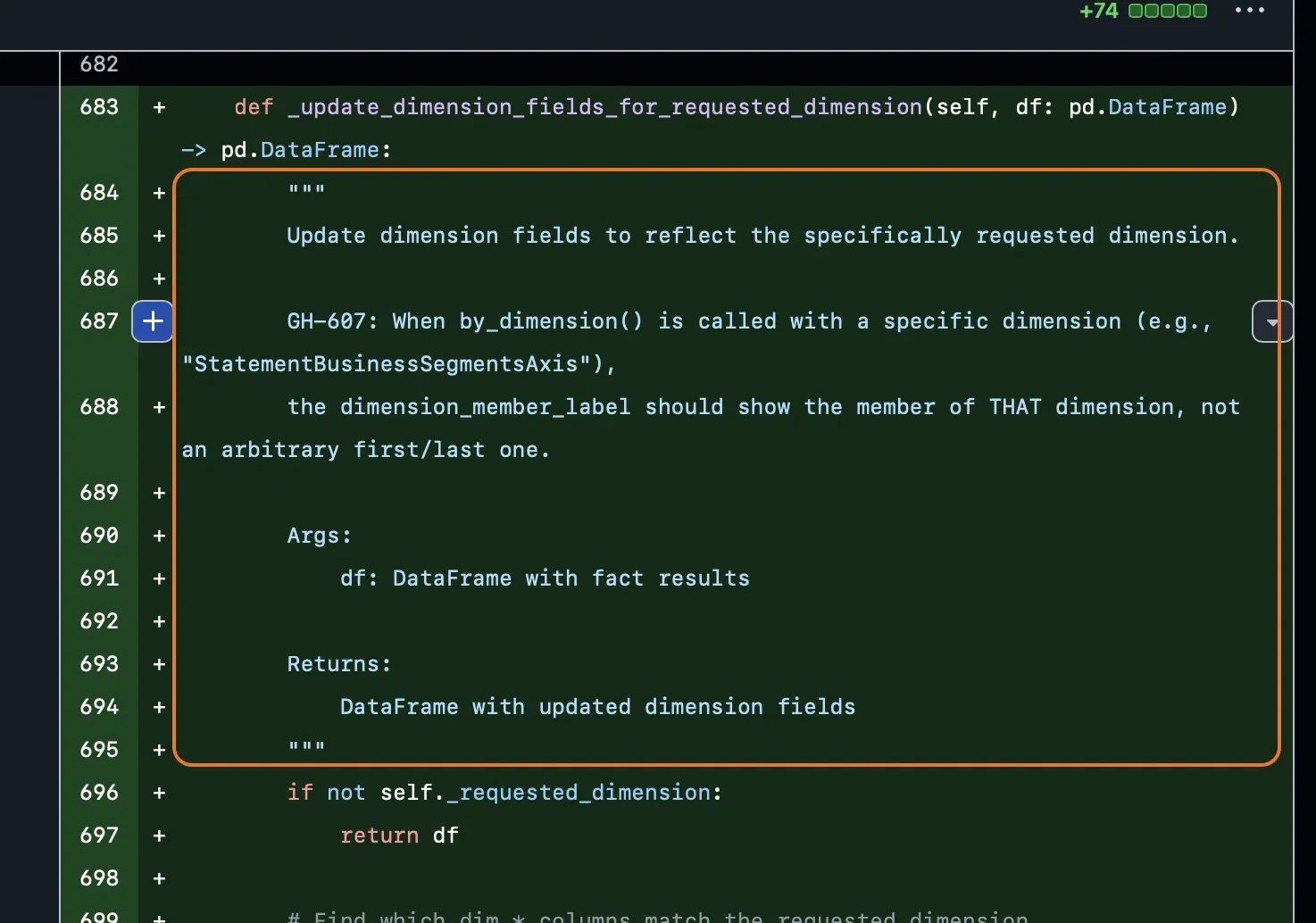

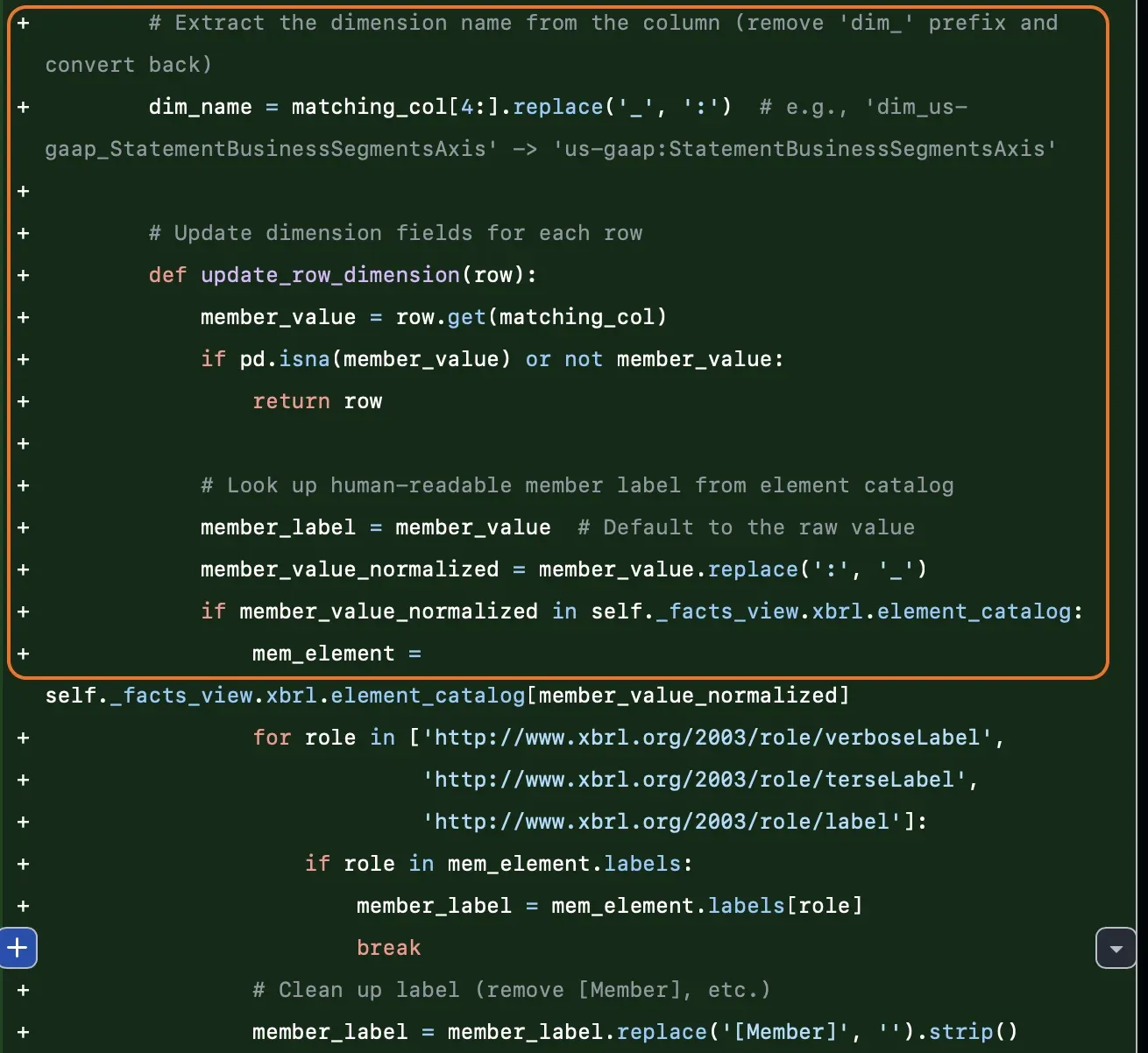

Next came a new function that updated the dimension fields so that the correct dimension would be shown. This function was also well commented.

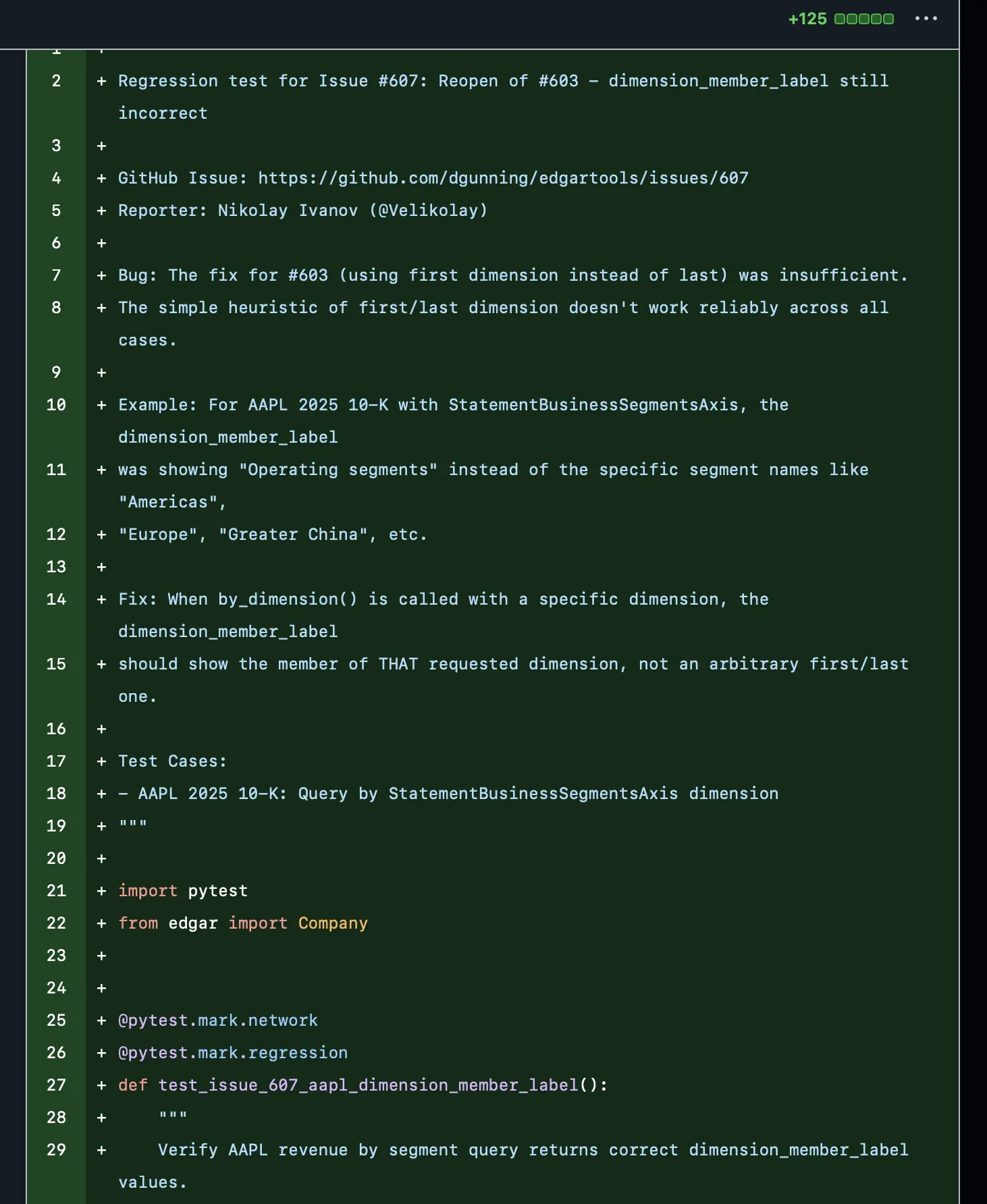

The PR also included a 125-line regression test. Look at the comments on the test class: they reference the GitHub issue and describe both the bug and the fix. Impressively, they show some understanding of the business logic behind dimension labels like "Americas", "Europe", and "Greater China", and it was this understanding that let the bot apply the correct fix.

A year ago, coding models would get tripped up by XBRL using underscores and colons in different parts of the spec, and I would inevitably have to intervene; otherwise the models would churn endlessly without realizing how to handle it. Not anymore: the model the bot used not only understood this but added comments about it.
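To make the underscore-versus-colon wrinkle concrete: instance documents refer to a concept by its QName, such as `us-gaap:Revenues`, while taxonomy schemas and linkbase locators commonly identify the same element by an ID like `us-gaap_Revenues`. The sketch below normalizes between the two forms. It assumes that `prefix_LocalName` convention and that the prefix itself contains no underscore; the helper names `to_qname`, `to_element_id`, and `same_concept` are hypothetical and are not part of edgartools.

```python
def to_qname(element_id: str) -> str:
    """Convert a schema element ID like 'us-gaap_Revenues' to 'us-gaap:Revenues'.

    Assumes the namespace prefix contains no underscore, so only the
    first underscore is treated as the prefix separator.
    """
    if ":" in element_id:
        return element_id  # already in QName form
    prefix, sep, local = element_id.partition("_")
    if not sep:
        raise ValueError(f"no prefix separator in {element_id!r}")
    return f"{prefix}:{local}"


def to_element_id(qname: str) -> str:
    """Convert a QName like 'srt:AmericasMember' to 'srt_AmericasMember'."""
    prefix, sep, local = qname.partition(":")
    if not sep:
        raise ValueError(f"no namespace prefix in {qname!r}")
    return f"{prefix}_{local}"


def same_concept(a: str, b: str) -> bool:
    """Compare two concept references regardless of which form each uses."""
    return to_qname(a) == to_qname(b)


print(to_qname("us-gaap_Revenues"))
print(to_element_id("srt:AmericasMember"))
```

Normalizing to one form at the boundary, rather than sprinkling string replacements through the code, is the kind of step that keeps a model (or a human) from churning on mismatched names.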

This code was not AI slop. Looking back at this review, nothing stands out that would have made it an obvious rejection. On the contrary, it rose above the standard of an accepted PR to edgartools and followed proper software engineering practices.

Should I have rejected the PR? What makes AI-submitted code different from AI-written code? I think I have a knee-jerk reaction to bots doing things on the Internet, and I associate that with bad things happening. So I was right to be cautious, but I think open source is going to need a shift in mindset about how software gets developed.

Who was moaed

To confirm that it was actually a bot that submitted the PR, I asked my own bot, Claude Code, to research the account. The research quickly confirmed that it was indeed a bot. Its GitHub activity was well beyond what a human could manage, and actually quite impressive: over the span of six hours, the bot submitted 15 PRs to different repositories across a wide range of software domains.
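One signal from that research, a burst of PRs in a short span, is easy to check yourself. Below is a minimal sketch of a sliding-window count over PR creation times. It assumes you have already collected the timestamps (for example, from the `created_at` field of GitHub search results for the account's PRs). The function names and the threshold of 10 PRs in six hours are hypothetical choices for illustration, not an established rule.

```python
from datetime import datetime, timedelta


def max_prs_in_window(created_at: list[datetime], window: timedelta) -> int:
    """Return the largest number of PRs opened within any span of length `window`."""
    times = sorted(created_at)
    best = 0
    start = 0
    for end, t in enumerate(times):
        # Move the left edge forward until times[start]..t fits inside the window.
        while t - times[start] > window:
            start += 1
        best = max(best, end - start + 1)
    return best


def looks_automated(created_at: list[datetime],
                    window: timedelta = timedelta(hours=6),
                    threshold: int = 10) -> bool:
    """Hypothetical heuristic: flag accounts that open `threshold`+ PRs within `window`."""
    return max_prs_in_window(created_at, window) >= threshold


# Example: 15 PRs, one every 24 minutes, all within about 5.6 hours.
start_time = datetime(2025, 1, 15, 8, 0)
burst = [start_time + timedelta(minutes=24 * i) for i in range(15)]
print(max_prs_in_window(burst, timedelta(hours=6)), looks_automated(burst))
```

A rate check like this is only a prompt for a closer look; a prolific human maintainer doing a batch of small fixes could trip it too.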

There is no way this could have been done by a single Claude Code instance. It could instead be the work of an automation framework, and there are several, including:

1. SWE-agent (Princeton/Stanford)

- Takes GitHub issue → automatically generates fix

- Uses any LLM (GPT-4, Claude, etc.)

- 33% success rate on SWE-bench benchmarks


2. OpenAgent

- Self-hosted orchestrator for AI coding agents

- "Point at a GitHub issue, let it write code, open PR"


3. Custom scripts using:

- GitHub API for issue discovery

- gh CLI for PR submission

- LLM APIs for code generation
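To illustrate the issue-discovery step of such a custom script, here is a minimal sketch that only builds a request URL for GitHub's REST issue search endpoint (`/search/issues`). The qualifiers used (`is:issue`, `is:open`, `label:`, `-linked:pr`, `updated:>=`) are standard GitHub search syntax, but the function name and this particular combination of filters are hypothetical; nothing here reflects what the moaed account actually ran.

```python
from datetime import date
from typing import Optional
from urllib.parse import urlencode

GITHUB_SEARCH_ISSUES = "https://api.github.com/search/issues"


def build_issue_search_url(label: str = "bug",
                           updated_since: Optional[date] = None,
                           unlinked_only: bool = True) -> str:
    """Build a search URL for open, labeled issues (hypothetical discovery step)."""
    terms = ["is:issue", "is:open", f'label:"{label}"']
    if unlinked_only:
        terms.append("-linked:pr")  # skip issues that already have a linked PR
    if updated_since is not None:
        terms.append(f"updated:>={updated_since.isoformat()}")
    # urlencode percent-escapes the query string (spaces become '+').
    return f"{GITHUB_SEARCH_ISSUES}?{urlencode({'q': ' '.join(terms), 'sort': 'updated'})}"


print(build_issue_search_url(updated_since=date(2025, 1, 15)))
```

A recently reopened bug with no linked PR, like the one in this story, is exactly what a filter of this shape would surface.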

I tried to think through the motives of the humans behind moaed. In my case, no malicious code was submitted to EdgarTools. Instead, there was high-quality code that fixed the actual problem. The optimistic case is that the person behind moaed is someone at a university using SWE-agent. If so, that person will have a killer research thesis in a couple of years, and we're going to get new techniques for software development and a jump in productivity.

The pessimistic case is someone doing credential padding, submitting PRs to different repositories to improve their own resume. I don't buy this. If you are good enough to operate SWE-agent in this manner, you'll have no problem getting a job. The ultra-pessimistic case is that eventually, after submitting a number of high-quality PRs and gaining trust, they'd submit a PR containing an actual supply chain attack. This is more likely, but even then it demonstrates a level of patience and long-term thinking that could be put to more direct use for profit.

Conclusion

I'm not sure what to make of this. I've added it to my memory bank of what's possible in the world of open source, which is of course changing rapidly as AI permeates software development. For edgartools, I'll do a bit more due diligence on which PRs to accept. Going forward, I will also do background research on PR authors and spend more time reviewing the code. But given how quickly things are changing, we may just have to start accepting contributions from AI actors.